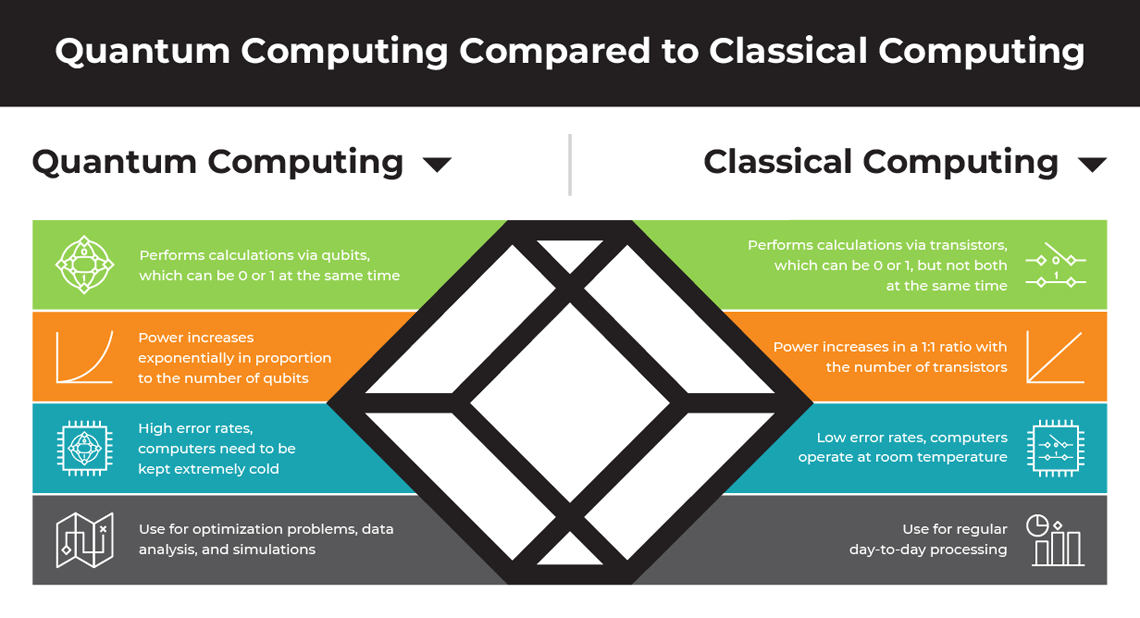

The emergence of quantum computing as an IT buzzword raises many questions, starting with the most basic one: how is it different from ordinary computing? Quantum computing differs from ordinary (classical) computing in several ways, as illustrated in the infographic.

Quantum computing applies the principles of quantum mechanics to solve certain kinds of problems far faster than is practical with classical computing. This computational power could also enhance Artificial Intelligence (AI), the field focused on creating algorithms and systems that mimic human cognition. By speeding up parts of AI's data analysis and learning processes, quantum computing may lead to more advanced and efficient AI systems.

Quantum computing uses quantum mechanics to perform operations on data. Unlike a classical bit, which is always either 0 or 1, a quantum bit (qubit) can exist in a superposition of both states at once. By exploiting superposition, quantum computers can solve certain complex problems faster than classical computers. For example, they are expected to excel at some data-processing tasks and at simulating molecules and materials, which is crucial for medical and chemical research.
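To make "multiple states simultaneously" more concrete, here is a minimal sketch that simulates a single qubit on a classical computer, assuming the standard state-vector model: a qubit is a pair of complex amplitudes, a gate is a transformation of those amplitudes, and the squared magnitude of each amplitude gives the probability of measuring 0 or 1. The function names are hypothetical, chosen for illustration only. Note that a measurement still returns just one outcome; quantum speedups come from algorithms that use interference between amplitudes, not from reading out every state at once.

```python
import math

# Illustrative state-vector model (hypothetical helper names):
# a single-qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 == 1.
Qubit = tuple[complex, complex]

ZERO: Qubit = (1 + 0j, 0 + 0j)  # the qubit prepared in the definite state |0>


def hadamard(q: Qubit) -> Qubit:
    """Apply the Hadamard gate, which maps |0> to an equal superposition of |0> and |1>."""
    a, b = q
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def measurement_probabilities(q: Qubit) -> tuple[float, float]:
    """Probability of reading 0 or 1 is the squared magnitude of each amplitude."""
    a, b = q
    return (abs(a) ** 2, abs(b) ** 2)


def amplitudes_needed(n_qubits: int) -> int:
    """A classical simulation of n qubits must track 2**n complex amplitudes."""
    return 2 ** n_qubits


if __name__ == "__main__":
    plus = hadamard(ZERO)
    print(measurement_probabilities(plus))  # approximately (0.5, 0.5)
    print(hadamard(plus))  # applying the gate twice returns (approximately) to |0>
    for n in (1, 10, 50):
        print(n, "qubits ->", amplitudes_needed(n), "amplitudes")
```

The last loop hints at why classical machines struggle to keep up: the bookkeeping needed to describe n qubits doubles with every qubit added.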

Despite its potential, quantum computing faces significant challenges. Qubits are fragile: their quantum states last only a short time before interactions with the environment destroy them (a process called decoherence), which limits how long a computation can run. Many current designs, such as those based on superconducting qubits, must be cooled to near absolute zero to suppress the thermal noise that causes decoherence. Quantum computers also still depend on classical computers for real-time control, such as issuing operations and reading out measurement results.
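Why short-lived quantum states matter can be shown with a back-of-the-envelope calculation. This sketch assumes a simplified model in which coherence decays exponentially with a coherence time T2, and it uses hypothetical figures (a T2 of 100 microseconds and 50 nanoseconds per gate operation) that are illustrative only, not measurements of any particular machine. It estimates how many sequential operations fit before coherence falls below a chosen threshold.

```python
import math

# Hypothetical figures for illustration only; real values vary widely by hardware.
COHERENCE_TIME_US = 100.0  # assumed T2 coherence time, in microseconds
GATE_TIME_US = 0.05        # assumed duration of one gate operation (50 ns)


def remaining_coherence(elapsed_us: float, t2_us: float = COHERENCE_TIME_US) -> float:
    """Simplified exponential model: fraction of coherence left after elapsed_us."""
    return math.exp(-elapsed_us / t2_us)


def gates_before_threshold(threshold: float,
                           t2_us: float = COHERENCE_TIME_US,
                           gate_us: float = GATE_TIME_US) -> int:
    """Number of sequential gates that fit before coherence drops below `threshold`."""
    # Solve exp(-t / T2) = threshold for t, then divide by the per-gate time.
    max_time_us = -t2_us * math.log(threshold)
    return int(max_time_us // gate_us)


if __name__ == "__main__":
    print(remaining_coherence(100.0))   # about 0.37 after one T2
    print(gates_before_threshold(0.9))  # gates before coherence drops to 90%
```

With these assumed numbers, only about 210 gates run before coherence falls to 90%, which illustrates why longer coherence times and error correction are central goals of current research.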

A key theoretical foundation for quantum computing was laid in 1985, when David Deutsch of the University of Oxford described the construction of quantum logic gates for a universal quantum computer.[1] The first working quantum computer was built in 1998. This marked a significant milestone, showing that theoretical concepts developed over the preceding years could be realized in practice.

Today, following AI, big data, and machine learning, quantum computing is widely regarded as the next technological frontier, with the potential to transform sectors such as healthcare, finance, and data security.

Some forecasts suggest that by the 2030s quantum computers could outperform today's supercomputers on certain tasks and begin to see wider use, and that by the 2040s they could become more accessible to consumers.[2] Quantum computing's ability to tackle problems beyond the practical reach of classical machines holds the promise of breakthroughs in many fields. As this new era approaches, it is worth staying informed and prepared for the quantum future ahead.

References:

1. https://www.britannica.com/technology/quantum-computer
2. https://www.rinf.tech/how-to-overcome-quantum-computing-mass-adoption-challenges/